
In today’s highly connected IT world, prolonged downtime can be a manufacturing company’s nightmare. Whether it results from a natural disaster or from human error, an outage can ripple throughout every aspect of your business, threatening operations, accruing high costs, creating negative customer experiences and even putting business reputation at stake, as we’ve seen with some highly publicized large-scale outages in recent years.

It’s no surprise, then, that disaster recovery (DR) has become a top consideration for manufacturing companies around the globe. Yet while many businesses are aware of the concerning implications of NOT having a DR plan in place, there’s still some confusion around what truly constitutes a sound DR plan. Common misconceptions, many of which are related to understanding your downtime tolerance, can create a false sense of security for business owners who believe they have a sufficient plan in place when in reality that plan is exposing them to risk.

In this blog, we’ll lay out everything you need to know, starting with a DR marketplace overview and ending with specific steps and considerations you can take to create a DR plan that’s a good fit for your unique business. We’ll highlight some major pitfalls along the way that every organization (particularly those running Power i systems) needs to be aware of, starting with the most basic: what constitutes a disaster.

What Constitutes a Disaster?

Thinking about “disasters” may conjure up images of fires, floods, storms, explosions, earthquakes, major power outages and the like. Yet too often we think of disasters as large-scale events, a misconception that can lead to complacency or an attitude of “it probably won’t happen to my business.”

While these types of natural occurrences are certainly a factor, disasters can also be characterized by seemingly benign events that in turn lead to business disruptions. This includes air conditioner leaks, overheated server spaces, a blown boiler, mold and other physical issues.

Additionally, hardware failures, software failures and human errors can always rear their heads, even among the most well-prepared IT teams. They may not cause major disruptions if your IBM i system health is in check, but for many organizations a variety of business processes depend on the continuity of small, seemingly insignificant, hard-to-troubleshoot add-ons.

Whatever the scale of the disaster, downtime is downtime, and failing to have an adequate DR plan in place threatens your business’s ability to keep ticking along as it should. Essentially, the need to maintain business continuity is the driving force behind disaster recovery, a critical point to keep in mind as you approach building out a DR plan.

More SMBs Embracing DR

As the need for DR grows in importance, many SMBs who may have once dismissed it are now jumping on board. Cloud technology has played a big role in this, which we’ll cover in the next section. The need for DR is also driven by the competitive pressures of today’s manufacturing marketplace. Regardless of size, no company can afford to be the weak link in a highly connected chain of customers and suppliers. And when unplanned downtime does occur, it’s often small businesses who have the hardest time recovering. Consider the following unsettling statistics for SMBs:

The Adoption of Cloud Technology

It wasn’t too long ago that the adoption of cloud technology was characterized by a cautious wait-and-see attitude, rife with perceived risks and unknowns. Today, of course, there’s been a dramatic shift in that attitude. Manufacturing companies are quick to embrace all things cloud, viewing “as-a-service” technologies as the most viable and secure option for their needs—and Disaster Recovery as a Service (DRaaS) is no exception.

DRaaS has exploded into the market in the last few years, breaking down many of the traditional barriers companies once faced when considering a DR plan, such as lack of budget and lack of resources. DRaaS has opened the doors for SMBs everywhere, making DR implementation easier, more accessible and often more cost-effective. DRaaS solutions essentially eliminate the need for large cash outlays for equipment, and they can be implemented quickly and without the need for complex IT infrastructure. They can also promise ready-to-go communication to multiple locations, faster testing times and fewer personnel requirements, as compared to managing everything in-house.

The Global Growth of DRaaS in Manufacturing

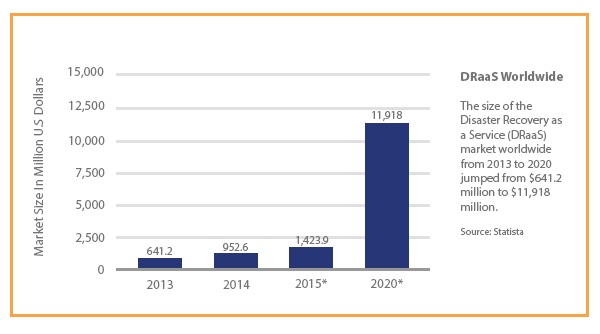

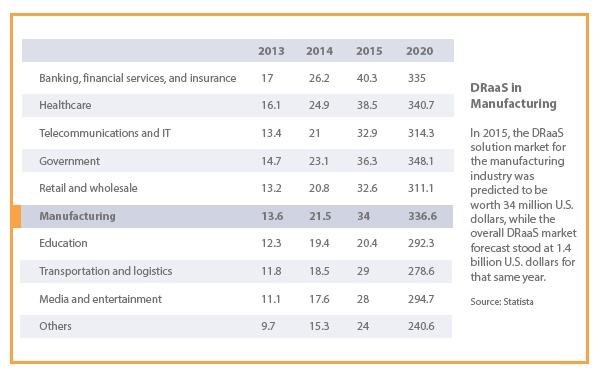

While some organizations still succeed in implementing and managing their DR efforts in-house, or with a “DIY” approach, the broader trend among manufacturing companies is to use a DRaaS solution for at least a subset of their DR needs. (Related: Still Not Sold on the Advantages of Cloud Solutions for IBM Power Systems? Keep Reading.) In fact, DRaaS is among the fastest-growing cloud-based services in the market today. Here’s a closer look at how that’s playing out in the manufacturing sector:

A Shifting Mindset

Along with the widespread acceptance of cloud solutions, the fast-growing DRaaS market can also be attributed to a more strategic mindset in how manufacturing businesses are thinking about their IT environments. DR was once a question of “What will it cost me to recover and rebuild?” Today, the notion of DR has evolved to reflect a more proactive mentality, with businesses now asking, “What’s it going to cost me if I CAN’T recover my systems?” and “What’s the true cost of that disruption to my customers and my business?”

Here again, this changing mentality is a result of a highly competitive—and highly intertwined—supply chain. Our disasters are shared; the downstream effect of an outage requires more focus and proactive planning than ever before. As technology advances, players across the board expect more and have less tolerance for disruptions. The best partners are the ones who can fulfill your orders reliably, making 24/7/365 availability the new, inexorable standard.

There’s much to think about when it comes to implementing DR for your company, so in this section, we’ll break down some of the key terms, elements and pitfalls that surround a DR program.

DR and High Availability (HA)

While they often go hand in hand, it’s important to make the distinction between DR and High Availability (HA). By definition, disaster recovery is an area of security that aims to protect and recover your IT infrastructure in the event of a human or natural disaster.

A DR program, then, is a pre-planned, documented set of policies and procedures. It ensures business continuity when the IT infrastructure that supports your mission-critical business systems is disrupted.

High Availability refers to the technology needed to minimize IT disruptions by ensuring continuity when critical parts of your environment are not available. HA solutions minimize downtime and data loss through replication: data is replicated to an environment in a separate geographic location. With an HA solution, as changes are made on a production system, they are replicated to a backup system in near-real-time. That means that if something were to happen to your production system, such as a fire or flood, the HA solution could initiate a “failover,” in which your HA system assumes the role of your production system.
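To make the replicate-then-fail-over idea a little more concrete, here is a minimal Python sketch. It assumes a deliberately simplified model in which every change on production is journaled and copied to a standby; the names `Node`, `ReplicatedStore`, `apply_change` and `failover` are hypothetical and do not correspond to any IBM i or vendor HA product API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical system holding key/value business data."""
    name: str
    data: dict = field(default_factory=dict)


class ReplicatedStore:
    """Toy model of HA replication: every write lands on production, then on a standby."""

    def __init__(self, production: Node, standby: Node):
        self.primary = production
        self.standby = standby
        self.journal = []  # ordered log of changes, similar in spirit to a database journal

    def apply_change(self, key, value):
        # 1. Record and apply the change on the current primary.
        self.journal.append((key, value))
        self.primary.data[key] = value
        # 2. Replicate it to the standby. Real HA products ship changes
        #    asynchronously over the network, hence "near-real-time".
        self.standby.data[key] = value

    def failover(self):
        # Promote the standby so it assumes the production role.
        self.primary, self.standby = self.standby, self.primary
        return self.primary


prod = Node("production")
backup = Node("backup-site")
store = ReplicatedStore(prod, backup)
store.apply_change("order-1001", "shipped")
store.apply_change("order-1002", "picked")

active = store.failover()  # e.g. the production site is lost to a fire
print(active.name, active.data)
```

Because the standby already holds every replicated change, failover in this model is a role swap rather than a restore, which is the core reason HA can shorten recovery so dramatically.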

Together, HA/DR are critical components that ensure data integrity and business continuity around the clock.

Read more about how High Availability fits into your IBM i Disaster Recovery plan: check out this related article.

Recovery Point Objective & Recovery Time Objective

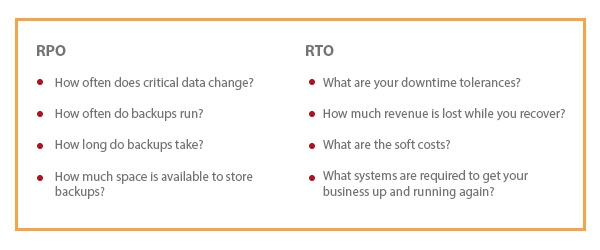

How long can your systems be down before your business is seriously impacted? Recovery time objective (RTO) is an organization’s best measure of this. By definition, RTO is the target duration within which a business process must be restored after a disruption to avoid unacceptable consequences to business continuity. In general, the shorter your RTO, the more robust your DR plan will need to be.

In the event of a disaster that leads to downtime, systems designers will aim to restore data to a recovery point objective (RPO): the point in time to which you need to be able to go back and retrieve your data. RPO is therefore usually expressed as the maximum amount of data loss, measured in time, that the business can accept. In the most simplified terms, if your business had a disaster two hours ago, your desired RPO would be the moments immediately prior to that two-hour mark, meaning essentially no data loss.
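As a rough illustration of how the two objectives are applied, the Python sketch below checks a recovery strategy against target RTO and RPO values. It assumes that worst-case data loss equals the interval between recovery points (a nightly backup can lose up to 24 hours of data) and that recovery time is a single estimated duration. The four-day tape recovery figure comes from the tape discussion later in this post; the other values and all names are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class Strategy:
    name: str
    recovery_point_interval: timedelta  # time between backups or replication points
    estimated_recovery_time: timedelta  # time to get systems running again


def meets_objectives(s: Strategy, rto: timedelta, rpo: timedelta) -> dict:
    # Worst case: the disaster strikes just before the next recovery point,
    # so up to one full interval of data is lost.
    return {
        "rto_met": s.estimated_recovery_time <= rto,
        "rpo_met": s.recovery_point_interval <= rpo,
    }


rto = timedelta(hours=4)     # hypothetical business target
rpo = timedelta(minutes=15)  # hypothetical business target

nightly_tape = Strategy("nightly tape", timedelta(hours=24), timedelta(days=4))
ha_replication = Strategy("HA replication", timedelta(seconds=30), timedelta(minutes=30))

for s in (nightly_tape, ha_replication):
    print(s.name, meets_objectives(s, rto, rpo))
```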

Here are some key factors organizations consider in determining an optimal RTO and RPO:

These questions demand a careful, coordinated approach, and it’s critical to note that this is not just a task for your IT team. Your approach to DR should involve key business stakeholders throughout your manufacturing firm. (In the section that follows, How to Create a Top-Down DR Plan, we’ll take a deep dive into how to do this effectively.)

Keep In Mind These RTO Pitfalls

With RTO and RPO serving as major components of DR, manufacturing companies should be aware of a mistake that we see time and time again: in general, RTO estimates are too high.

Why? Too often companies approach RTO from an internal perspective. Business leaders assess their tolerance for downtime based on what’s happening “inside their own walls,” looking to their company’s systems, critical applications and internal processes to estimate that tolerance. What’s missing from that approach is the customer. Customer impact and customer attrition are critical parts of this equation that are often overlooked.

When thinking about RTO, ask the following questions, and you’re likely to realize your tolerance for downtime is far lower than you assumed.

Again, by creating a top-down DR plan that starts with your business requirements—not your applications—your company can gain a more reasonable and comprehensive understanding of downtime tolerances. Fortunately, we’ve seen the needle move in the right direction in general, with more manufacturing companies recognizing the importance of realistic RTO assessments.

In a recent survey conducted by Information Technology Intelligence Corp (ITIC), 72 percent of respondents consider 99.99 percent to be the minimum acceptable level of reliability for their main line of business servers, up from 49 percent in 2014.
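It helps to translate availability percentages into actual downtime. The short Python calculation below converts an availability level into the maximum downtime it permits over a 365-day year (leap years ignored). At 99.99 percent, often called “four nines,” that budget is only about 52.6 minutes per year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def max_downtime_minutes(availability_pct: float) -> float:
    """Maximum yearly downtime permitted by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for pct in (99.0, 99.9, 99.99, 99.999):
    print(f"{pct}% -> {max_downtime_minutes(pct):.1f} minutes/year")
```

Seen this way, a single multi-day recovery would exceed a four-nines downtime budget many times over.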

Cloud Backups and Tape Backups

While backups play an important role in recording your data, essentially creating “pulse points” in time to which you can go back and retrieve data, make no mistake: backups alone do not constitute a DR plan. They will help you recover data up to your most recent backup, but they do little to cover you during and after downtime. An HA/DRaaS solution, on the other hand, takes a far more comprehensive approach, ensuring business continuity before, during and after a disaster. Through real-time data replication, it keeps mission-critical applications and business processes running smoothly until the production system is repaired and back online, something your company cannot achieve with backups alone.

Cloud Backups

Many organizations find that backing up data to the cloud is an easy and attainable option, making cloud-based backups (also referred to as backup as a service or BaaS) yet another fast-growing trend in cloud technologies. For companies considering this option, it’s important to think about not just the backup process, but the recovery process as well. Be sure to ask potential vendors what the backup and recovery sets look like. Does the provider’s solution offer fast restore times? And are those restore times guaranteed? Vendor credibility is extremely important, so as you would with any cloud provider, be sure to do your homework during the selection process.
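When comparing providers’ restore times, a back-of-the-envelope estimate can show whether a quoted figure is plausible. The Python sketch below assumes restore time is dominated by transfer time (data size divided by effective throughput) plus a fixed setup overhead; the data size, link speed, efficiency factor and overhead are placeholder values to replace with your own.

```python
def estimated_restore_hours(data_gb: float, link_mbps: float,
                            efficiency: float = 0.7,
                            overhead_hours: float = 1.0) -> float:
    """Rough cloud-restore estimate: transfer time plus a fixed setup overhead.

    efficiency: assumed fraction of the nominal link speed actually achieved.
    """
    megabits = data_gb * 8 * 1000  # GB -> megabits (decimal units)
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600 + overhead_hours


# Hypothetical example: 2 TB of data restored over a 1 Gbps link.
print(f"{estimated_restore_hours(2000, 1000):.1f} hours")
```

If a vendor quotes a restore time well below an estimate like this, it is worth asking how that figure is achieved and whether it is guaranteed.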

Tape Backups

Of all the pitfalls to avoid in your approach to DR, the reliance on tape back-ups is arguably the most concerning. Why? In the critical moments following an unplanned outage, recovered tapes are found to not work at all in roughly 30% of cases. Here are some additional findings from a global study that show how a surprising number of IT administrators are still struggling with backup tapes for their legacy data:

This leads to a scenario we’ve seen unravel all too often: a disaster occurs and a manufacturing business finds that it needs to restore, say, its Power i machine. In that moment, IT managers discover that even the best-case scenario is dismal: retrieving backup tapes from off-site storage, obtaining a new Power i machine, reconfiguring it and then restoring from tape can take up to four days. And remember, a disconcerting number of tapes are found not to work at all. (Read more at 3 Major Pitfalls to Avoid With Your IBM i Disaster Recovery Plan.)

To make matters worse, companies often find they have no viable way to go back and re-enter into their system all the transactions left unaccounted for from the point at which the downtime occurred. When you think about the number of transactions that occur even within one hour, let alone half a day, the magnitude of this problem becomes overwhelming.
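A quick calculation shows how fast this adds up. The Python sketch below assumes a constant transaction rate and treats every transaction since the last good backup, plus any recorded manually during the outage, as needing re-entry. The rate, the 12-hour gap and the four-day (96-hour) outage are hypothetical inputs, the last two echoing the figures above.

```python
def transactions_to_reenter(tx_per_hour: int, hours_since_backup: float,
                            outage_hours: float) -> int:
    """Transactions lost since the last backup plus those recorded manually during the outage."""
    return round(tx_per_hour * (hours_since_backup + outage_hours))


# Hypothetical: 500 transactions/hour, last backup half a day before the failure,
# and a four-day tape-based recovery.
print(transactions_to_reenter(500, 12, 96))
```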

In this section, we’ll offer strategies to help you create a DR plan that’s sufficient for your specific manufacturing organization. Remember, a DR plan is essentially a prioritized set of policies and procedures that will enable you to continue doing business in the event of a disaster or system outage. A “top-down” DR program, as we’re referring to it here, should start with an assessment of your business requirements. It should ultimately yield a matrix of business applications showing downtime tolerances, solutions and associated costs on a per-application basis. The following section discusses each of these factors and how they all work together.
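The DR matrix described above can be represented very simply. The following Python sketch models one row per business application with a downtime tolerance, a chosen solution and an annual cost; the application names, solutions and figures are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class DRMatrixRow:
    application: str
    downtime_tolerance_hours: float  # maximum acceptable outage for this application
    solution: str
    annual_cost: int                 # hypothetical cost, in your currency of choice


matrix = [
    DRMatrixRow("Order entry", 0.5, "HA replication (DRaaS)", 60_000),
    DRMatrixRow("Shipping", 2, "HA replication (DRaaS)", 25_000),
    DRMatrixRow("HR portal", 72, "Cloud backup (BaaS)", 3_000),
]

# List the most time-critical applications first.
for row in sorted(matrix, key=lambda r: r.downtime_tolerance_hours):
    print(f"{row.application:<12} {row.downtime_tolerance_hours:>6}h  "
          f"{row.solution:<24} {row.annual_cost:>8,}")

print("Total annual DR cost:", sum(r.annual_cost for r in matrix))
```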

Downtime Assumptions

In the event of a disaster, which processes are most vital to your operations? How quickly will the business applications supporting them need to be recovered? When thinking about DR, these questions are too often answered through assumptions. Businesses often believe 24 to 48 hours to be acceptable downtime for a given application, but rarely are those assumptions tested for accuracy.

Critical Tip: Ditch your downtime assumptions and keep an open mind! It’s nothing personal, but it’s important to note that as business leaders approach DR from the top down, more often than not they find their previous assumptions to be incorrect.

By following the steps below, you can replace those assumptions with a more realistic, strategic approach. And that starts with prioritizing your business processes.

1: Prioritize Business Processes

What are your mission-critical business processes? Which ones are the most important to keep your business going? For example, your “Tier 1” processes are those which cannot stop even for a short period of time, such as your customer-facing systems or order shipping systems. These processes are likely to require a mirroring solution that gives your company near-immediate recovery.

A Tier 2 process, on the other hand, is one that may not need immediate recovery. Why? Customers probably will not feel the impact right away, and you can continue to do business when that process goes down. A Tier 3 business process, then, may be one that is important to employees but is not essential to running your business… and so on and so forth. To assess your processes and assign these priorities, you’ll need to do the following:

This analysis will essentially form the foundation of your DR plan and inform other criteria in your DR matrix, such as solutions and testing.
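One way to keep tier assignments consistent across stakeholders is to write the rules down explicitly. The Python sketch below encodes the tier descriptions above as a simple function. The two yes/no attributes and the three-tier cutoff are a simplification we are assuming for illustration; a real assessment will weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class BusinessProcess:
    name: str
    immediate_customer_impact: bool  # would customers feel an outage right away?
    needed_to_run_business: bool     # needed to operate, though not minute-to-minute


def assign_tier(p: BusinessProcess) -> int:
    if p.immediate_customer_impact:
        return 1  # cannot stop even briefly; likely needs a mirroring solution
    if p.needed_to_run_business:
        return 2  # business continues for a while; recovery need not be immediate
    return 3      # important to employees, but not essential to running the business


processes = [
    BusinessProcess("Order shipping", True, True),
    BusinessProcess("Production scheduling", False, True),
    BusinessProcess("Employee training portal", False, False),
]
for p in processes:
    print(p.name, "-> Tier", assign_tier(p))
```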

2: Assess Downtime Tolerances

At the same time you’re evaluating and prioritizing your business requirements, you will need to assign downtime tolerances for your business applications.
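Because a single application often supports several business processes, a useful rule of thumb is that an application can tolerate no more downtime than the most time-sensitive process that depends on it. The Python sketch below applies that rule; the process names, the process-to-application mapping and the hour values are all hypothetical.

```python
# Hypothetical downtime tolerances per business process, in hours.
process_tolerance = {
    "Order entry": 1,
    "Shipping": 4,
    "Month-end reporting": 48,
}

# Hypothetical map of which applications each process depends on.
process_apps = {
    "Order entry": ["ERP", "EDI gateway"],
    "Shipping": ["ERP", "Freight interface"],
    "Month-end reporting": ["ERP", "BI warehouse"],
}


def application_tolerances(process_tolerance, process_apps):
    """An application's tolerance is the minimum tolerance of the processes using it."""
    result = {}
    for process, apps in process_apps.items():
        tol = process_tolerance[process]
        for app in apps:
            result[app] = min(result.get(app, tol), tol)
    return result


for app, hours in sorted(application_tolerances(process_tolerance, process_apps).items()):
    print(f"{app}: {hours}h")
```

Note how the shared ERP system inherits the strictest tolerance (1 hour) even though month-end reporting alone could wait two days.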

3: Factor In Solutions and Cost

Given our integrated IT environments, downtime costs can be far reaching. Customers are not always patient. If your manufacturing firm is down for a few days, delayed business can mean lost business – and the revenue lost during that time must be considered in your DR plan.

Downtime costs, of course, extend beyond revenue lost. Be sure you factor in soft costs as well. For example, in the event you must revert to a manual process, what are the costs associated with having a less efficient workforce? Additionally, what costs are incurred not just during but after downtime? Ask questions such as:

Keep in mind that determining the true cost of downtime is perhaps the most difficult aspect of disaster recovery. Downtime costs are unique to your specific business. They cannot be measured in a standardized way, nor is there a one-size-fits-all solution. Whether it’s a simple solution or a sophisticated one, a solution that’s driven by your business requirements is the best-fit choice for your organization.
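Although every business’s numbers differ, it can help to lay out the cost categories discussed above in one place. The Python sketch below is a simple additive model, assuming hourly lost revenue, reduced productivity while staff fall back to manual processes, and a one-time post-outage cost; every input is a hypothetical placeholder to be replaced with your own estimates.

```python
def downtime_cost(hours: float, revenue_per_hour: float, staff_affected: int,
                  loaded_hourly_wage: float, productivity_loss: float,
                  post_outage_costs: float) -> float:
    """Lost revenue + less efficient workforce during the outage + costs incurred afterward.

    productivity_loss: assumed fraction (0-1) of staff output lost while working manually.
    """
    lost_revenue = hours * revenue_per_hour
    lost_productivity = hours * staff_affected * loaded_hourly_wage * productivity_loss
    return lost_revenue + lost_productivity + post_outage_costs


# Hypothetical 24-hour outage.
cost = downtime_cost(hours=24, revenue_per_hour=10_000, staff_affected=50,
                     loaded_hourly_wage=40, productivity_loss=0.5,
                     post_outage_costs=20_000)
print(f"Estimated cost: {cost:,.0f}")
```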

4: Test Your Top-Down Design

Ultimately, your DR matrix rolls up each of these considerations into a DR plan that shows how each piece comes together: your business applications, each with an acceptable downtime tolerance, a solution and associated cost. Now it’s time to test through simulation. Testing, however, is another area of DR that’s commonly subject to pitfalls.

DR testing frequency is largely inadequate: only about 40% of companies test their DR plans once a year, and 28% test them rarely, if ever.

Source: 2016 State of Disaster Recovery Survey

So where do most companies go wrong? Too often, applications are tested without considering connections and interdependencies, or the downstream/upstream effects. When considering a testing or backup plan, many companies will start by looking at their core ERP systems, believing that if they have a plan to get their main systems up and running, they’ll be okay.

But as we know, business processes today rely on outside systems. From Windows servers in data centers, to cloud-based systems, to banking systems, to freight company systems… there are far more dependencies between interfaces and integrations than a cursory test (or a core system test) could ever account for. Instead, you can do the following:

Finally, keep in mind that failed tests are often documented but then nothing is done to correct them. Remember to be honest and keep an open mind about your results.
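To avoid testing the core ERP system in isolation, it helps to list everything a business process actually relies on, directly or indirectly, and bring all of it into the test scope. The Python sketch below walks a hypothetical dependency map (the system names echo the examples above, but the map and the test results are invented) and flags systems that were never tested or that failed, so failures are tracked for remediation rather than just documented.

```python
from collections import deque

# Hypothetical map: system -> systems it calls or relies on.
dependencies = {
    "Order fulfillment": ["ERP"],
    "ERP": ["Windows file server", "Banking interface", "Freight carrier API"],
    "Freight carrier API": ["Cloud label service"],
    "Banking interface": [],
    "Windows file server": [],
    "Cloud label service": [],
}


def dependency_closure(process: str) -> set:
    """All systems the process depends on, directly or transitively (breadth-first walk)."""
    seen, queue = set(), deque([process])
    while queue:
        current = queue.popleft()
        for dep in dependencies.get(current, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return seen


# Hypothetical results from the last DR simulation.
last_test_passed = {"ERP": True, "Windows file server": True,
                    "Banking interface": True, "Freight carrier API": False}

scope = dependency_closure("Order fulfillment")
untested = sorted(s for s in scope if s not in last_test_passed)
failed = sorted(s for s in scope if last_test_passed.get(s) is False)
print("In scope:", sorted(scope))
print("Never tested:", untested)
print("Failed, needs remediation:", failed)
```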

If you would like to take an even deeper dive on any of these steps, and learn how they have applied to other manufacturers, you can download our recorded webinar.

Once your organization has a good understanding of DR, from the big picture down to the nitty gritty details, the next question becomes: do I manage my DR efforts in-house or outsource to a provider? Or a combination of the two?

Most manufacturers will find that the decision does not have to be one or the other. A DIY approach to DR can take on many forms, and there are varying degrees of services you may or may not need to entrust to a third party. Key points of consideration include:

These are just some of the many factors that will influence your decision, and every manufacturing organization must look to its unique needs to find the best-fit approach to DR. That said, the trend we’re seeing today with “aaS” solutions, whether for all or just a subset of DR needs, is impossible to ignore. The growing DRaaS market makes DR solutions more accessible and affordable for businesses large and small, and as that happens, solutions become more sophisticated and therefore better suited to protect you from incidents that threaten business continuity. In our tightly woven global network of manufacturers, customers and suppliers, the increased adoption of disaster recovery is good news all around.

Do You Have Questions We Didn’t Address? Ask Away!

Whatever phase you’re in with disaster recovery planning, your manufacturing organization can greatly benefit by partnering with a provider who has both industry-specific business experience and technical expertise. PSGi partners with Thrive to bring you the full breadth of skills and knowledge. From your apps to your servers, we can help with everything from handling security and audit requirements for your DRaaS site, to assessing and prioritizing your business’s DR needs, to finding the right technical solutions (across any platform) that meet your unique criteria. If you have questions, we’re here to help, so simply contact us and let us know how we can get your DR plan on the right track.